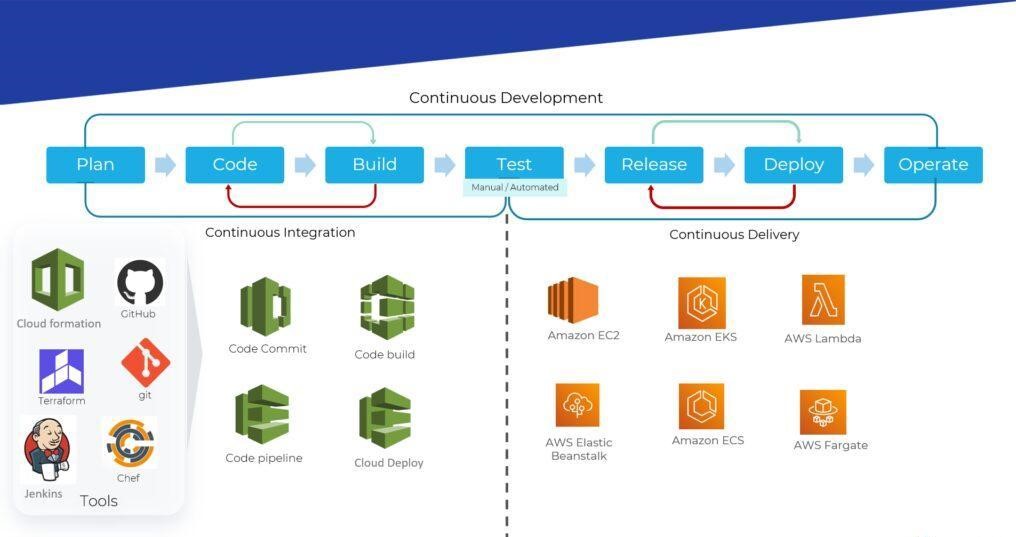

A continuous integration and continuous deployment (CI/CD) pipeline is a strategy for integrating the work of many people, quickly and precisely, into one cohesive product. It comprises the series of steps that must be performed to deliver a new version of software, and it focuses on improving software delivery through automation across the software development lifecycle (SDLC). Automating the development, testing, production and monitoring phases lets teams develop higher-quality code faster.

Every step of a CI/CD pipeline can be executed manually, but the true value of a pipeline comes from automation. A pipeline is a set of predefined tasks that determine what needs to be completed and when. Independent tasks are usually executed in parallel to accelerate delivery. In a typical CI/CD pipeline, code is pushed to and stored in a repository, the code change triggers a build, and the build is tested and then deployed to the production environment.
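
The stage-by-stage flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a real CI/CD tool: the `Stage` class, the `run_pipeline` function and the four stage names are hypothetical, and the stages run one after another rather than in parallel to keep the example short.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    """A named pipeline step; `run` returns True on success."""
    name: str
    run: Callable[[], bool]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns (overall_success, names_of_completed_stages).
    """
    completed: List[str] = []
    for stage in stages:
        if not stage.run():
            return False, completed
        completed.append(stage.name)
    return True, completed


# Hypothetical stages mirroring the flow in the text; each one
# simply reports success here.
stages = [
    Stage("checkout", lambda: True),
    Stage("build", lambda: True),
    Stage("test", lambda: True),
    Stage("deploy", lambda: True),
]

ok, done = run_pipeline(stages)
print(ok, done)
```

Stopping at the first failed stage is the property that matters: a build that fails its tests never reaches the deploy stage.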

Enabling CI/CD pipeline for container-based workloads

- CI/CD, a DevOps strategy: CI/CD is a DevOps tactic. In fact, it is the backbone of the DevOps methodology, which brings together developers and IT operations teams to deploy software. CI/CD gives DevOps teams a single repository in which to keep automation tools and store work, so that code can be continuously integrated and tested for quality.

- Containerization, a DevOps tool: In containerization, all the components of an application (the software, its environment, dependencies and configuration) are bundled into a single isolated unit called a container. Each unit can be deployed in its own space on a shared operating system, in any computing environment, on-premises or in the cloud. Containers are lightweight, portable and well suited to automation. Together with orchestration tools, they facilitate CI and CD.

- Docker, a containerization solution: Docker is a containerization solution used widely in DevOps workflows. It is an open source platform that allows developers to quickly and easily build, deploy, update, run and manage containers. Docker makes it easy to decouple applications from their surroundings, and its ecosystem also provides a collection of container images (for example, through Docker Hub) that can be used for development.

Common use cases for containerization workloads

- Modernizing legacy application development practices to container-based platforms

- Moving pipelines and workflows across multiple microservices and applications with ease

- Providing DevOps support for CI/CD

- Enabling compliance with industry standards and organizational policies while shipping releases to production faster

- Minimizing errors during the build, deploy, test, and release process of a new software release

- Making repetitive deployment tasks easier

CI/CD pipeline architecture

DevOps with containers: The workflow

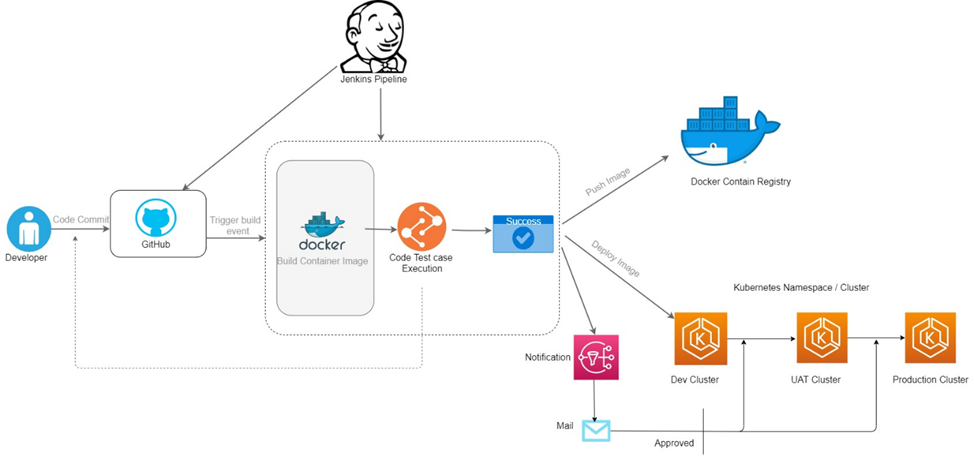

- After coding, developers push the code to a shared repository such as GitHub. Merging code frequently and validating it helps keep the integrated codebase free of errors. When code changes are committed to the repository, a GitHub webhook triggers a Jenkins project build, which activates the pipeline. The pipeline downloads the code and starts the build process.

- In this step, the code is compiled, artifacts are built, and dependencies are resolved and stored in the repository. Environments are created, containers are built and images are stored for rollout. Testing follows. The Jenkins build job uses a dynamic build agent in Amazon Elastic Kubernetes Service (EKS) to perform the container build.

- A container image is created from the code in source control and is then pushed to a container registry, such as Amazon Elastic Container Registry (ECR) or a Docker registry.

- As the continuous deployment step, Jenkins deploys the updated container image to the Kubernetes cluster.

- The web application uses Amazon DynamoDB as its back end. Both DynamoDB and Amazon EKS report metrics to Amazon CloudWatch.

- A Grafana instance provides visual dashboards of application performance based on the data from Amazon CloudWatch.
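
One way to picture how a commit flows through this workflow is to derive the container image reference from the commit and reuse it at every later step. The sketch below is illustrative only: `image_reference`, `plan_steps`, the registry host `registry.example.com` and the repository name `web-app` are hypothetical, and the returned strings are descriptive labels, not real Jenkins or Docker commands.

```python
from typing import List


def image_reference(registry: str, repository: str, commit_sha: str) -> str:
    """Build an image reference tagged with the first 7 characters of the commit SHA."""
    if len(commit_sha) < 7:
        raise ValueError("commit SHA is too short to derive a tag")
    return f"{registry}/{repository}:{commit_sha[:7]}"


def plan_steps(registry: str, repository: str, commit_sha: str) -> List[str]:
    """Return descriptive labels for the steps a commit passes through."""
    image = image_reference(registry, repository, commit_sha)
    return [
        f"checkout {commit_sha}",
        f"build {image}",
        f"push {image}",
        f"deploy {image}",
    ]


# Hypothetical registry, repository and commit SHA.
for step in plan_steps("registry.example.com", "web-app", "0123456789abcdef"):
    print(step)
```

Tagging the image with the commit makes every deployed container traceable to the exact source revision that produced it.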

Containerization infrastructure and configuration as code

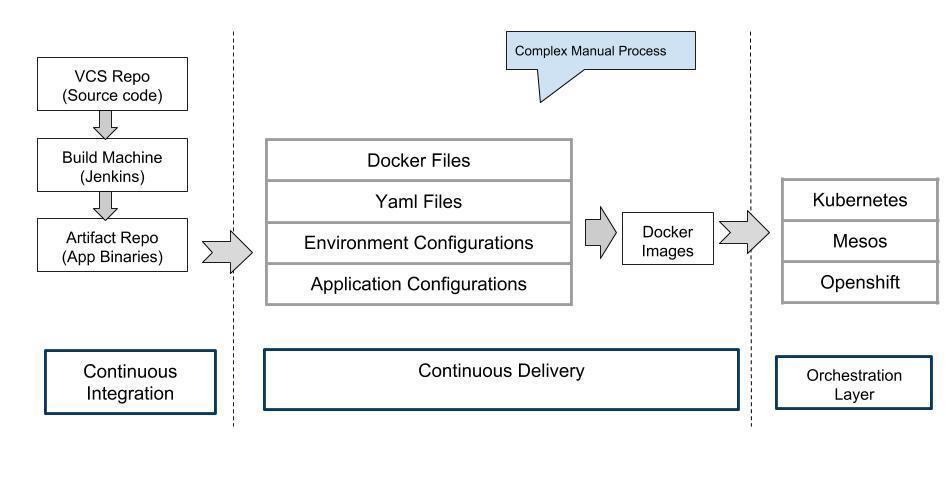

The true power of containers becomes visible when they are orchestrated with Kubernetes, which allows DevOps pipelines to be automated more effectively. Kubernetes is a portable open source platform used to manage containerized workloads and services. It facilitates both automation and declarative configuration. YAML, a data-serialization language frequently used for writing configuration files, is used to define Kubernetes deployments and other resources. Its advantage is that YAML files can be created and stored in a Git repository, so all changes can be tracked and audited.
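
To make the idea of declarative configuration concrete, here is a minimal sketch that builds a Deployment manifest as plain data and serializes it. JSON is used instead of YAML only because Python's standard library has no YAML writer; Kubernetes accepts manifests in either format. The function name `deployment_manifest`, the application name `web-app` and the image reference are hypothetical.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Return a minimal Kubernetes Deployment manifest as a dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical application name and image reference.
manifest = deployment_manifest("web-app", "registry.example.com/web-app:0123456", 3)
print(json.dumps(manifest, indent=2, sort_keys=True))
```

Because the output is plain text, it can be committed to Git and reviewed like any other change, which is the auditing benefit described above.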

- Continuous deployment pipeline with no downtime: The objective of the pipeline is to perform a set of tasks that deploy a fully tested and functional service or application to production. Kubernetes handles the need for frequent deployments well through its container orchestration mechanism.

- Easy rollbacks: Kubernetes has a built-in rollback mechanism. When new code is ready to be deployed, the new desired state is defined, and Kubernetes orchestrates the creation of new containers and the removal of existing ones. If a problem arises, the immutable nature of container images allows an easy rollback to the previous state.

- On-demand infrastructure: Through its configuration, Kubernetes can scale infrastructure up and down based on the resources needed to handle the application's workloads, which makes it elastic by nature.

- Run everywhere pipelines: With the Kubernetes architecture, containers and pipelines can be moved easily to anywhere in the same cloud or to on-premises infrastructure.
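
The rollback behaviour in the list above can be modelled with a small revision history. This is a toy model, not the Kubernetes API: the `RevisionHistory` class and the image names are hypothetical, and a real cluster records a rollback as a new revision rather than discarding the failed one. In practice the equivalent operation is the `kubectl rollout undo` command.

```python
from typing import List


class RevisionHistory:
    """Toy model of a deployment's revision history (not the Kubernetes API)."""

    def __init__(self, initial_image: str) -> None:
        self._revisions: List[str] = [initial_image]

    @property
    def current(self) -> str:
        """The image of the most recent revision."""
        return self._revisions[-1]

    def roll_out(self, image: str) -> None:
        """Record a new desired state."""
        self._revisions.append(image)

    def roll_back(self) -> str:
        """Drop the latest revision and return the restored image."""
        if len(self._revisions) < 2:
            raise RuntimeError("no previous revision to roll back to")
        self._revisions.pop()
        return self.current


# Hypothetical image tags.
history = RevisionHistory("web-app:v1")
history.roll_out("web-app:v2")
print(history.roll_back())
```

Because images are immutable, returning to `web-app:v1` means running exactly the same artifact that was deployed before.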

Containerization features

- Availability: Amazon Elastic Kubernetes Service (EKS) operates and scales the Kubernetes control plane across multiple AWS Availability Zones to offer high availability. Within the Amazon EKS cluster, application traffic is distributed to one or more containers (pods) that run the application as individual microservices. This approach to running containerized applications in Kubernetes provides a highly available infrastructure for the applications.

- Scalability: Amazon EKS makes it easy to run Kubernetes on AWS and on-premises. It allows the number of worker nodes in the cluster to scale automatically to meet the application's workload demands. As the load on the application grows, the EKS cluster can scale up the number of Kubernetes nodes.

- Resiliency: Amazon EKS is built on Kubernetes, whose architecture and components are resilient by design. Kubernetes components monitor the containers (pods) and restart them if there is any issue. Combined with running multiple Kubernetes nodes, this lets applications tolerate a pod or node being unavailable.
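
The scalability point can be illustrated with the proportional rule that the Kubernetes documentation gives for the Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below applies that rule at the pod level only; node-level scaling on EKS is a separate mechanism and is not modelled here. The function name and the `min_replicas` and `max_replicas` defaults are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Scale the replica count in proportion to metric / target, within bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 pods averaging 90% CPU against a 60% target scale up.
print(desired_replicas(4, 90.0, 60.0))
# 4 pods averaging 30% CPU against a 60% target scale down.
print(desired_replicas(4, 30.0, 60.0))
```

The same rule drives scaling in both directions, which is what makes the workload elastic rather than only able to grow.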

Security and security threats in containers

Container security is an important part of a complete security assessment. It involves the practice of protecting the containerized environment and applications from potential risks and threats by implementing a combination of security policies and tools.

- Access and authorization exploits: Grant access only to authorized users and block all others from accessing the platform. Encrypt the configuration files used in Kubernetes deployments (for example, web.config and appsettings.json), particularly in a containerized setup.

- Container image vulnerabilities: Security mechanisms that prevent malicious attacks are key. Detecting code vulnerabilities, outdated packages, malicious code, and other threats during the build stage can improve security dramatically.
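
Build-stage detection is often enforced as a gate that fails the build when a scan reports findings above a chosen severity. The sketch below assumes a scanner has already produced a list of findings; the finding format, the `EXAMPLE-` identifiers, the severity names and the `fail_on` default are all hypothetical and do not correspond to any particular scanning tool.

```python
from typing import Dict, List

# Severity levels from least to most serious (assumed naming).
SEVERITY_ORDER = ["LOW", "MEDIUM", "HIGH", "CRITICAL"]


def blocking_findings(
    findings: List[Dict[str, str]], fail_on: str = "HIGH"
) -> List[Dict[str, str]]:
    """Return the findings whose severity is at or above `fail_on`."""
    threshold = SEVERITY_ORDER.index(fail_on)
    return [
        finding
        for finding in findings
        if SEVERITY_ORDER.index(finding["severity"]) >= threshold
    ]


def build_allowed(findings: List[Dict[str, str]], fail_on: str = "HIGH") -> bool:
    """The build may proceed only when no finding reaches the threshold."""
    return not blocking_findings(findings, fail_on)


# Hypothetical scan results.
scan = [
    {"id": "EXAMPLE-0001", "severity": "LOW"},
    {"id": "EXAMPLE-0002", "severity": "CRITICAL"},
]
print(build_allowed(scan))
```

Placing this check before the image is pushed keeps vulnerable images out of the registry entirely.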

Monitoring CI/CD pipelines, end-to-end

- Monitor health of the CI/CD build pipeline and set up cognitive, proactive alerts spanning various tools

- Assess performance and quality of deployments in a unified way across multiple tools

- Monitor pipeline performance and report issues by combining Amazon CloudWatch with Grafana for visual dashboards, or extend build pipeline monitoring to include application monitoring (Nagios) and container monitoring (Kubernetes)
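
As a rough illustration of pipeline health monitoring, the sketch below computes a success rate and mean duration from recent builds and decides whether to raise an alert. The `BuildRecord` type, the sample data and the 80% threshold are hypothetical; in a real setup these numbers would come from the monitoring tools named above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BuildRecord:
    """Outcome of one pipeline run."""
    succeeded: bool
    duration_seconds: float


def success_rate(builds: List[BuildRecord]) -> float:
    """Fraction of builds that succeeded."""
    if not builds:
        raise ValueError("no builds to evaluate")
    return sum(1 for build in builds if build.succeeded) / len(builds)


def mean_duration(builds: List[BuildRecord]) -> float:
    """Average build duration in seconds."""
    if not builds:
        raise ValueError("no builds to evaluate")
    return sum(build.duration_seconds for build in builds) / len(builds)


def should_alert(builds: List[BuildRecord], min_success_rate: float = 0.8) -> bool:
    """Alert when the success rate drops below the threshold."""
    return success_rate(builds) < min_success_rate


# Hypothetical recent builds.
recent = [
    BuildRecord(True, 120.0),
    BuildRecord(True, 150.0),
    BuildRecord(False, 90.0),
    BuildRecord(True, 200.0),
]
print(success_rate(recent), mean_duration(recent), should_alert(recent))
```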